The exponential data volume, variety, and velocity growth have increased the significance of efficient, reliable, and scalable data refinement processes in the modern data landscape. These processes involve cleansing, transforming, and aggregating data to be analyzed and are the backbone of accurate and reliable data analytics. Also known as Extract, Load, Transform (ELT) processes, they underscore the need for a practical framework.

This article dives into Snowflake, the cloud data platform, and the unique abilities of Snowflake to execute procedures and tasks natively within its ecosystem, enabling new ways of ELT integration. Encora's library of ELT integration frameworks and processes has enabled our Snowflake-based customers to leverage Snowflake's full potential in terms of efficient, reliable, scalable data processing and cost savings.

This article discusses the domain of integrated ELT. Specifically, it focuses on leveraging Snowflake's procedural capabilities and task automation for constructing a dynamic ELT orchestration framework. The exploration involves comparing the advantages of a native Snowflake approach and the conventional ETL tools that operate externally. The crux of this exploration lies in the science of data transformation, where the ultimate destination bears equal weight to the enterprises' data strategy journey itself. The outcome: a sturdy ELT framework that can be a potential game changer in Snowflake-based data-driven initiatives.

Snowflake's Native ELT Capabilities

The native execution within the Snowflake ecosystem capitalizes on the platform's unique architecture to process queries efficiently, taking advantage of isolated compute resources, optimized storage, and intelligent query optimization techniques.

ELT refers to a data integration approach where data is extracted from source systems, loaded into a data warehouse, and finally transformed within the warehouse.

Here's how ELT processes can leverage Snowflake procedures and tasks:

Stored Procedures

Snowflake allows you to create stored procedures using SQL, JavaScript, and Python. These procedures can encapsulate complex data transformation logic. When it comes to ELT processes, you can create stored procedures to perform the transformation part of the process.

For example, you may have extracted raw data from source systems and loaded it into staging tables within Snowflake. A stored procedure can then be designed to transform the data in these staging tables into the desired format for analysis. This could involve cleaning, aggregating, joining, and reshaping the data as needed.

An example of a stored procedure is below:

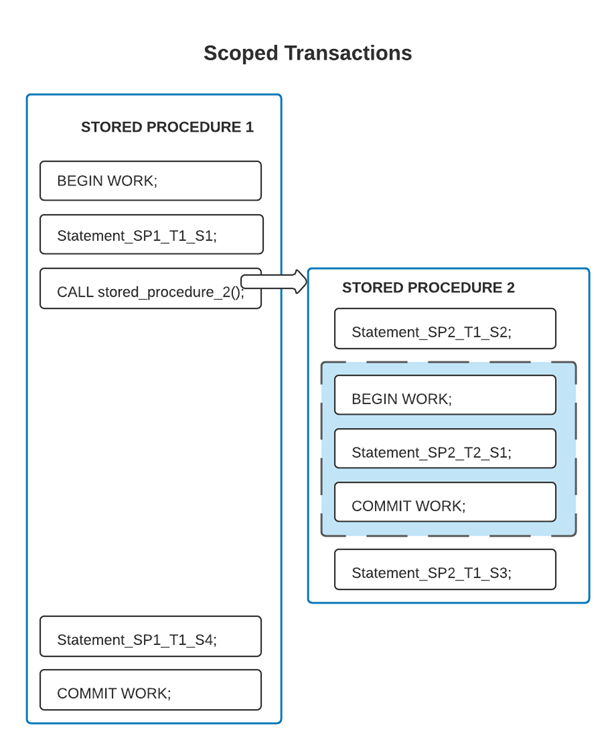

https://docs.snowflake.com/en/sql-reference/transactions#scoped-transactions

Using stored procedures for ELT offers distinct advantages: they encapsulate logic, simplify maintenance, ensure transaction scope, provide procedural language support, allow us to call out other stored procedures, and systematically organize transformation steps. Moreover, these procedures can be easily automated through scheduling.

Tasks

Snowflake tasks that can execute SQL statements, including calling stored procedures, at scheduled intervals or based on specific triggers. Tasks often automate recurring data processing activities, making them well-suited for ELT orchestration processes.

Stored Procedures and Tasks for building data pipelines

For ELT, Stored Procedures and Tasks can automate the entire data pipeline. Here's a high-level breakdown of how tasks can be used in ELT:

- Extraction: If the source systems provide data through APIs or other mechanisms, tasks can trigger data extraction at specific intervals. This could involve calling external APIs and storing the extracted data in staging tables.

- Loading: Once data is available in staging, another task can load the data from staging tables to destination tables within the data warehouse.

- Transformation: As mentioned earlier, tasks can execute stored data transformation procedures. These procedures can be part of the transformation step in the ELT process.

- Scheduling: Snowflake tasks can run at specific times to process the data.

- Combining stored procedures and tasks allows you to design an end-to-end ELT process within the Snowflake ecosystem. This approach offers advantages like automation, maintainability, and consistency in data processing. It also allows for near real-time ELT capabilities using scheduling flexibility.

Native Snowflake vs. Conventional ETL Tools

Native Snowflake and conventional ETL tools are used for data integration and transformation. However, they have some key differences.

|

No |

Snowflake Native ELT Solution |

Conventional ETL Tools |

|

1 |

Native Snowflake refers to the built-in capabilities within the Snowflake data platform for data integration and transformation. |

Conventional ETL tools are third-party software applications designed for data integration and transformation. |

|

2 |

It eliminates the need for additional ETL tools, reducing complexity and potential integration issues. |

Conventional ETL tools offer a more comprehensive range of features and functionalities than Native Snowflake. |

|

3 |

Without external tools, you can perform TL (Transform, Load) operations directly within the Snowflake environment. |

These tools often provide a visual interface for designing and managing ETL workflows, allowing you to extract data from various sources, transform it according to your requirements, and load it into a target destination. |

|

4 |

Leverage Snowflake's powerful processing capabilities and scalability to handle large volumes of data |

It may take a significant amount of time and resources to handle large volumes of data. |

Ultimately, deciding between Native Snowflake and conventional ETL tools depends on your specific requirements and preferences.

Encora has, over the past few years, built mature data integration capabilities in terms of ETL/ELT frameworks, reusable data pipeline components, data migration accelerators, etc. Encora has been helping its customers build efficient, reliable, scalable, and reusable ETL/ELT processes within Snowflake or by using conventional ETL tools.

Key Benefits of ELT Processes Within Snowflake

- Reduced Data Movement: Traditional ETL processes involve transforming data between systems before loading it into the data warehouse. Snowflake's ELT processes minimize data movement by performing transformations directly within the platform and eliminating ELT tools' external computing resource requirements.

- Scalability and Performance: Snowflake's architecture enables independent scaling of compute and storage resources, ensuring optimal performance for ELT processes with large datasets and complex transformations.

- Concurrent Processing: Snowflake efficiently handles concurrent queries and tasks with automatic resource allocation and workload management, enabling multiple ELT processes to run concurrently without performance impacts.

- Unified Platform: Performing ELT processes in Snowflake simplifies data management by allowing extraction, loading, and transformation within a single platform.

- Data Transformation Flexibility: Snowflake enables complex SQL queries and stored procedures for various data transformations. Data engineers can utilize standard SQL functions, advanced analytical functions, and custom logic to meet their requirements.

- Data Governance and Security: Snowflake provides comprehensive security features and data governance controls. You can maintain consistent security practices throughout the data lifecycle by performing ELT processes within the platform. Additionally, you can define access controls, encryption, and auditing mechanisms to ensure data integrity and compliance.

- Integrated Analytics: Snowflake offers storage and powerful analytics capabilities, allowing seamless integration and immediate analysis without moving data to a separate environment. Thus, data pipelines can broaden their scope from being only ELT pipelines to end-to-end analytics or AI/ML pipelines right within Snowflake.

- Automation and Scheduling: Snowflake allows you to automate ELT processes with scheduled or triggered tasks, ensuring data freshness and reliable processing while reducing manual intervention.

- Reduced Latency: Data transformations happen within the same platform as data storage, resulting in minimal latency caused by data movement. This is especially advantageous for obtaining real-time or near-real-time data insights.

- Operational Efficiency: Centralizing ELT processes within Snowflake simplifies data integration, reduces complexity, and improves maintenance and troubleshooting due to the unified platform.

- Cost Optimization: The native Snowflake ELT framework eliminates the need for external tools, infrastructure, and maintenance cycles.

Constructing an ELT Framework

Integrating Snowflake's procedural capabilities and task automation creates a streamlined and automated workflow for your ELT processes. This approach minimizes manual intervention, ensures data consistency, and takes advantage of Snowflake's performance and scalability features.

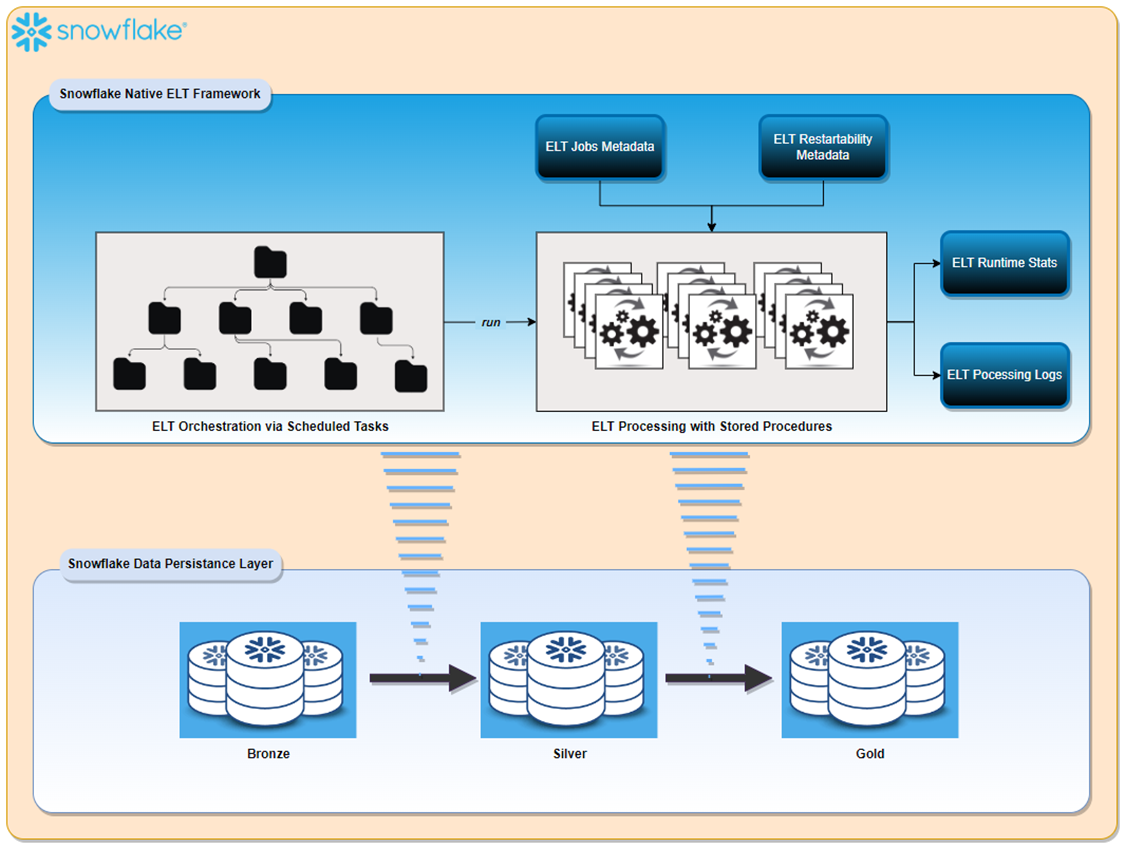

The Dynamic ELT framework shown below has the following features:

- Exception Handling and Reporting: The framework handles failures of any ELT jobs and logs errors appropriately in error tables for further analysis.

- Support for Restarting Failed Jobs: The restart ability of jobs eliminates data gaps and data duplication.

- ELT Processing Logs: The ELT execution logs are maintained in different auditing tables to investigate data or data transformation issues.

- Dynamic Scheduling: Snowflake Tasks offer several different ways of scheduling and dependency tracking.

- Reusable Framework: The ELT pipelines are based on metadata. Adding new jobs or making changes to existing jobs is a matter of configuring metadata. Also, due to the configuration-based structure, the same framework can be used for driving different data transformation needs. E.g., Bronze to Silver or Silver to Gold.

- ELT Run Time Stats: Capturing run time statistics of ELT jobs allows for analysis of all the past ELT runs and gives visibility into future resource needs and performance tuning opportunities.

Snowflake Features for Efficient and Maintainable ELT Pipelines

Snowflake's features are well-suited to contribute to developing a flexible and scalable ELT (Extract, Load, Transform) framework. Here's how various aspects of Snowflake's architecture and capabilities can be harnessed to build such a framework:

- Separation of Compute and Storage: Snowflake's architecture separates the compute resources from storage, allowing for independent scaling, elasticity, and scalability. This enables appropriate resource allocation for data processing and transformation tasks.

In an ELT framework, compute resources can scale to handle varying workloads, ensuring optimal performance during data processing.

- Virtual Data Warehouses (VDWs): Snowflake's Virtual Data Warehouses (VDWs) allow you to create dedicated compute resources for different workloads. You can allocate VDWs for specific transformation tasks in an ELT framework, ensuring isolation and performance optimization. This also supports workload isolation, preventing resource contention between different processes. Horizontal and vertical scaling are the approaches for managing and optimizing the performance of data warehouses.

- Snowflake Horizontal Scaling: Snowflake's architecture allows us to add or remove nodes as required, leading to improved performance and the ability to handle larger workloads.

- Snowflake Vertical Scaling: Snowflake's vertical scaling is useful when the workloads require more computational power or memory but may not necessarily benefit from many nodes. It involves increasing the resources of individual nodes. In a native ELT framework, it becomes very easy to scale Snowflake warehouses, horizontally or vertically, depending on the compute resources needed.

- Staging and Raw Data Storage: Snowflake's support for staging tables allows you to store and manage raw data before transformation. Staging tables provide a buffer between source and transformed data, allowing you to validate, cleanse, and preprocess data before loading it into the data warehouse. This flexibility ensures data quality and consistency. Snowflake allows different table types for defining staging tables. Temporary/Transient tables are usually suitable for landing data that needs to live only for a short duration of time.

- Result Set Caching: Snowflake's result set caching feature can enhance the performance of repeatable queries. In an ELT framework, if specific transformation queries are executed, caching can speed up these queries, reducing the need for repeated data processing.

- Data Sharing and Collaboration: Snowflake's data sharing capabilities allow you to share data securely between different accounts and organizations. This is valuable in a scalable ELT framework where you might need to collaborate with external partners, customers, or other departments.

Key Takeaways

- Seamless Integration: Leveraging Snowflake's native capabilities for ELT processes eliminates the need for external tools and streamlines workflows.

- Efficiency and Scalability: Snowflake's procedural abilities and task automation enable the construction of a dynamic, efficient, and scalable ELT framework.

- Data Transformation Excellence: The science of data transformation becomes more potent within Snowflake's environment.

- Strategic Advantage: Utilizing a native ELT framework in Snowflake can provide a significant strategic edge in data analytics endeavors.

- Encora’s ELT Framework for data-driven pipelines: Encora has, over the past few years, built mature data integration capabilities in terms of ETL/ELT frameworks, reusable data pipeline components, data migration accelerators etc. Encora has been helping its customers build efficient, reliable, scalable, and reusable ETL/ELT processes within Snowflake or using conventional ETL tools.

About Encora

Fast-growing tech companies partner with Encora to outsource product development and drive growth. Contact us to learn more about our software engineering capabilities.