The metaverse has emerged as an appealing promise that has sparked the imaginations of millions worldwide. Often described as a turning point in digital innovation, it offers users an immersive virtual playground that can enrich interaction, collaboration, and creativity. By integrating modern technologies such as live translation and customized avatars, platforms can offer users a markedly better metaverse experience. This article evaluates the current state of metaverse experiences, highlights ongoing challenges, and explores untapped possibilities.

The Metaverse Experience Thus Far

While the metaverse today provides an impressive visual experience through head tracking, motion capture, and advanced rendering, the overall experience is still quite limited in scope. Although the number of virtual environments and social spaces is increasing, interactivity and engagement within them are still rooted in the physical world. Users are restricted to a narrow range of actions and motions, which limits creativity and expression.

One of the significant impediments to a fully realized metaverse experience is the immaturity of haptic technology. Haptics is the science of touch in human-computer interaction. It covers the design, creation, and application of technology that simulates a sense of touch, enabling deeper immersion in digital spaces.

Although haptic feedback devices are on the market today, they still face substantial challenges in rendering realistic textures, temperature, pressure, and inertia. Consequently, users struggle to "feel" the virtual realm they inhabit, which creates a disconnect between their physical and digital experiences. Full haptic implementation is a herculean task that will likely take a few more years to mature.

Nevertheless, there is low-hanging fruit that can deliver quick and significant improvements in metaverse experiences. One such avenue worth exploring is the live translation of voice and text exchanges in virtual spaces.

Powering Up the Metaverse Experience: Live Translations Unchained

Imagine stepping into a virtual meeting, conference, or event and being able to communicate seamlessly with people across the world, irrespective of the languages spoken. The metaverse holds immense potential for connecting individuals worldwide in ways that transcend physical boundaries. However, barriers still exist. Integrating live translations into metaverse experiences would bridge the gap between speakers of different languages, fostering more inclusive and accessible interactions.

AI-powered language models have advanced by leaps and bounds in recent years and can now deliver accurate translations in near real time. Combined with speech recognition and speech synthesis, they convert speech to text and text back to speech, facilitating smooth communication across the metaverse. Broad language support ensures that individuals from various linguistic backgrounds can participate in and contribute to the conversation. Furthermore, this feature allows creators to expand their audience reach and make their content easily consumable by a global user base. Incorporating live translation into the metaverse can enrich user experiences and open new opportunities for businesses, creators, and users.

Proof of Concept

To put these technologies to the test, we developed a proof of concept demonstrating the effectiveness of integrating live translation within a metaverse platform. To keep the scope small, our main requirement was to translate everything into English: whatever language the speaker uses, the audio the listener hears will be in English.

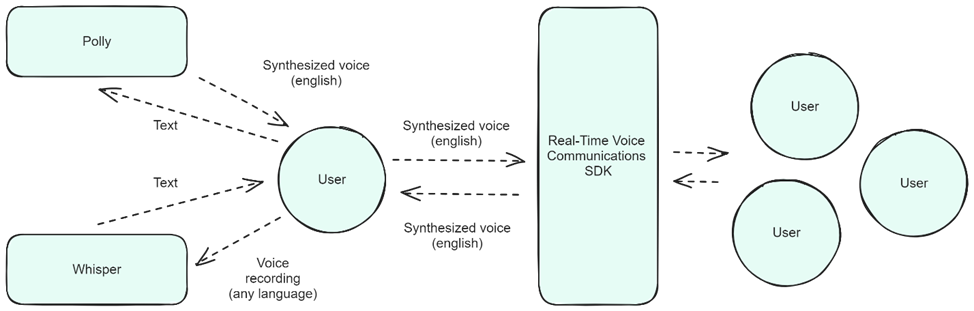

Speaking of scope, the architecture of the application was fairly simple. We used OpenAI’s Whisper to transcribe speech and Amazon Polly to synthesize it, alongside our other proof of concept, the Metaverse Gym.

Figure 1: A simplified view of the architecture
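
As a rough illustration of how such a pipeline could be wired together, here is a minimal Python sketch. It is not the code behind the proof of concept. It assumes the open-source `openai-whisper` package and `boto3` with configured AWS credentials, and it assumes that translation into English is delegated to Whisper's built-in `translate` task, which the article does not confirm. The function names, the model size `"base"`, and the voice `"Joanna"` are illustrative choices.

```python
from typing import Callable


def transcribe_to_english(audio_path: str, model_name: str = "base") -> str:
    """Return English text for speech recorded in any language Whisper supports.

    Assumption: the `openai-whisper` package is installed. The model size is
    an arbitrary choice for this sketch.
    """
    import whisper  # imported lazily so this module loads without the package

    model = whisper.load_model(model_name)
    # task="translate" asks Whisper for English output regardless of input language.
    result = model.transcribe(audio_path, task="translate")
    return result["text"].strip()


def synthesize_english(text: str, voice_id: str = "Joanna") -> bytes:
    """Return MP3 bytes for the given English text using Amazon Polly.

    Assumption: `boto3` is installed and AWS credentials are configured.
    The voice is an illustrative choice.
    """
    import boto3  # imported lazily for the same reason as above

    polly = boto3.client("polly")
    response = polly.synthesize_speech(
        Text=text, OutputFormat="mp3", VoiceId=voice_id
    )
    return response["AudioStream"].read()


def translate_utterance(
    audio_path: str,
    transcribe: Callable[[str], str] = transcribe_to_english,
    synthesize: Callable[[str], bytes] = synthesize_english,
) -> bytes:
    """Run one utterance through the pipeline: speech in, English audio out."""
    text = transcribe(audio_path)
    if not text:
        return b""  # nothing intelligible was said, so there is nothing to play
    return synthesize(text)
```

Passing the two stages in as parameters keeps the pipeline easy to test with stand-in functions and makes it simple to swap either service later.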

Here is a demo of the application where two users interact:

Challenges

While simultaneous translation is low-hanging fruit in the metaverse context, that does not mean it is an easy task. One of the earliest challenges you may encounter while implementing this feature is detecting sentence boundaries and punctuation in continuous speech. For example, how do you know that the user has finished a phrase so that it can be translated? Moreover, cultural nuances and implied meanings may get lost in translation, which can lead to miscommunication.
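
One common heuristic for the "has the user finished?" question is to treat a sufficiently long pause as the end of an utterance. The sketch below illustrates that idea on a stream of per-frame audio energy values. It is an assumption for illustration, not the approach used in the proof of concept, and the function name, threshold, and frame count are hypothetical values that would need tuning against real audio.

```python
from typing import Iterable, Iterator, List


def split_utterances(
    frame_energies: Iterable[float],
    silence_threshold: float = 0.01,
    max_silent_frames: int = 3,
) -> Iterator[List[int]]:
    """Yield one list of speech frame indices per detected utterance.

    A frame counts as speech when its energy exceeds `silence_threshold`.
    An utterance ends once `max_silent_frames` consecutive silent frames
    follow speech. Both parameters are illustrative defaults.
    """
    current: List[int] = []
    silent_run = 0
    for index, energy in enumerate(frame_energies):
        if energy > silence_threshold:
            current.append(index)
            silent_run = 0  # a short pause inside a phrase is forgiven
        elif current:
            silent_run += 1
            if silent_run >= max_silent_frames:
                yield current  # the pause was long enough: phrase finished
                current = []
                silent_run = 0
    if current:
        yield current  # flush speech that ran to the end of the stream
```

A pause-based rule trades latency for accuracy: a longer required pause produces fewer broken phrases but delays the moment translation can start.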

Another challenge is preserving the speaker's original emotion and intonation when translating voice. Emotional context plays a key role in oral communication. Thus, providing an AI-enhanced voice that accurately captures the speaker's feelings will be paramount to developing a truly immersive experience. There are many research papers on this topic, but we still lack products or services that solve the issue.

Lastly, translation delay can also be an impediment. While the technology is improving every day, real-time translation is rarely truly "real-time" and invariably involves some lag. Finding ways to minimize this delay will be crucial to implementing an effective live translation feature.
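
Before trying to reduce lag, it helps to know which stage contributes most of it. The following sketch shows one simple way to time each stage of a pipeline using only the Python standard library. The helper names `timed` and `slowest_stage` and the stage labels are hypothetical, and no measurements from the proof of concept are implied.

```python
import time
from contextlib import contextmanager
from typing import Dict, Iterator


@contextmanager
def timed(stage: str, timings: Dict[str, float]) -> Iterator[None]:
    """Add the wall-clock duration of the wrapped block to timings[stage]."""
    start = time.perf_counter()
    try:
        yield
    finally:
        # Accumulate so repeated calls for the same stage add up, in seconds.
        elapsed = time.perf_counter() - start
        timings[stage] = timings.get(stage, 0.0) + elapsed


def slowest_stage(timings: Dict[str, float]) -> str:
    """Return the name of the stage that contributed the most delay."""
    return max(timings, key=timings.get)
```

Wrapping each step, for example `with timed("transcribe", timings): ...` and `with timed("synthesize", timings): ...`, produces a per-stage breakdown that shows where optimization effort is best spent.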

Possible Improvements

From the user experience perspective, the biggest improvement for this proof of concept would be to translate the audio each player hears into that player's native language instead of always into English. This would complete the cycle of audio translation and significantly improve user interaction.

Conclusion

While the current metaverse experience has its unique appeal, much of its potential remains untapped. Implementing technologies such as live translation, custom avatars, and haptic feedback, along with VR and AR integration, can substantially elevate the metaverse experience by promoting inclusivity, enhancing non-verbal communication, and immersing users in intricately detailed virtual realms.

As technology evolves, the metaverse is poised to let users forge connections, collaborate, and express their individuality uninhibited by geography or language. By harnessing these modern technologies, we can build a richer and more inclusive metaverse experience. The metaverse abounds with opportunities that are waiting to be mapped and treasured.

Key Takeaways

- The metaverse presents an innovative digital realm for global interaction, collaboration, and creativity. However, to reach its potential, it needs to successfully integrate advanced technologies that make interaction better than, or at least on par with, real life.

- Language barriers present a significant challenge to seamless global interaction within the metaverse. Implementing AI-powered translation tools will improve communication and foster a more inclusive environment.

- As technology continues to evolve, the metaverse has the potential to become a transformative platform for connection, collaboration, and self-expression, unhindered by geographical or language barriers. Harnessing these emerging technologies is essential to fulfilling this potential and creating an empowered metaverse experience for all users.

Acknowledgment

This piece was written by João Pedro São Gregório Silva, a software development specialist at Encora. Thanks to João Caleffi for reviews and insights.
