Apple’s Vision Pro introduces a paradigm shift in how users will navigate immersive, virtual environments without physical, hand-held controllers. With Vision Pro, users can select, scroll, zoom, rotate, and move objects using hand gestures and eye movements, similar to multi-touch on the iPhone.

In 2017, Apple acquired SensoMotoric Instruments, a German computer vision applications company known for its real-time eye tracking technology. This technology is used in Vision Pro to reduce lag and prevent motion sickness.

Natural Controls: Using Hand Gestures and Eye Tracking on the Vision Pro

Hand gestures and eye tracking on visionOS work together to enable fluid interaction. The user's gaze serves as a pointer, much like a mouse cursor: users target a UI element simply by looking at it. They then make a hand gesture to trigger an action, for example, tapping their fingers together to click or flicking the wrist to scroll.

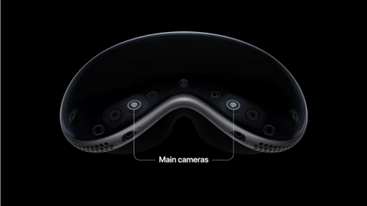

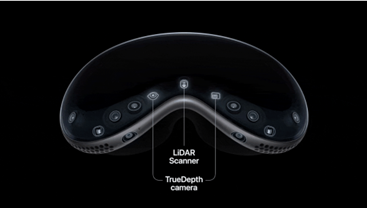

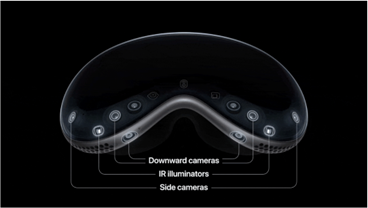
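The look-then-pinch model described above can be pictured with a small toy example. This is a minimal sketch in plain Swift, not visionOS code: on the device, targeting is handled by the system, and the `Element` type, the `target` and `handlePinch` functions, and the normalized coordinates are all hypothetical names and assumptions introduced here for illustration.

```swift
// Illustrative only: a toy model of "look to target, pinch to activate".
// All names here are hypothetical and not part of any Apple API.

/// A rectangular UI element in normalized coordinates (0...1 on each axis).
struct Element {
    let name: String
    let minX, minY, maxX, maxY: Double

    /// True when the given point lies inside the element's bounds.
    func contains(x: Double, y: Double) -> Bool {
        x >= minX && x <= maxX && y >= minY && y <= maxY
    }
}

/// Returns the first element under the gaze point, if any.
func target(atX x: Double, y: Double, in elements: [Element]) -> Element? {
    elements.first { $0.contains(x: x, y: y) }
}

/// A pinch acts like a click on whatever the eyes are currently targeting.
func handlePinch(gazeX: Double, gazeY: Double, elements: [Element]) -> String {
    guard let hit = target(atX: gazeX, y: gazeY, in: elements) else {
        return "nothing selected"
    }
    return "activated \(hit.name)"
}

let buttons = [
    Element(name: "Play", minX: 0.0, minY: 0.0, maxX: 0.4, maxY: 0.2),
    Element(name: "Stop", minX: 0.6, minY: 0.0, maxX: 1.0, maxY: 0.2),
]
print(handlePinch(gazeX: 0.7, gazeY: 0.1, elements: buttons)) // activated Stop
print(handlePinch(gazeX: 0.5, gazeY: 0.5, elements: buttons)) // nothing selected
```

The point of the sketch is the division of labor: the eyes only choose the target, and nothing happens until the hand confirms it.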

The Vision Pro has two front (main), two side, and four bottom-mounted cameras for hand tracking, as well as infrared sensors for eye tracking. The eye tracking system uses LEDs to project invisible light patterns onto the eyes and infrared cameras to read them, providing precise input that allows users to select an item simply by looking at it and tapping their fingers together. The external cameras have a wide field of view, allowing users to make gestures comfortably with their hands resting on their lap.

Infrared cameras in the Vision Pro can track hand and finger movements, even in dark environments, without requiring users to hold their hands in front of the device.

The basic hand interactions are built on a single motion: pinching the index finger and thumb together. Users pinch to expand applications, move them, and scroll through them. Pinching the fingers together is the equivalent of pressing on the screen of a phone.

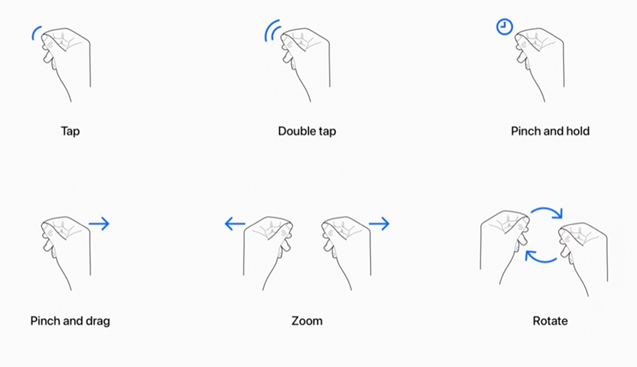

SwiftUI provides built-in support for these standard gestures:

- Tap to select

- Pinch to rotate and manipulate objects

- Double tap

- Pinch and hold

- Pinch and drag to scroll

There are also two-handed gestures to zoom and rotate.

By using ARKit, developers can create custom gestures specific to their app. ARKit provides the person’s hand and finger positions as input for custom gestures and interactivity. For example, the Happy Beam app on Vision Pro recognizes its central heart-shaped hand gesture by using ARKit’s support for 3D hand tracking in visionOS.

Users make a heart-shaped hand gesture to generate a projected beam during play.
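To show how hand and finger positions can drive a custom gesture, here is a minimal sketch in plain Swift. It assumes the fingertip positions have already been obtained (on visionOS they would come from ARKit hand tracking) and deliberately does not call ARKit. The `HandPose` type, the `isPinching` and `isHeartShape` functions, and the distance thresholds are hypothetical values chosen for illustration. The heart check is a simplified heuristic, not the Happy Beam app's actual code.

```swift
// Illustrative only: plain Swift, no ARKit.
// All type names, function names, and thresholds are hypothetical.

/// Fingertip positions for one hand, in meters, in a shared world space.
struct HandPose {
    var thumbTip: SIMD3<Float>
    var indexTip: SIMD3<Float>
}

/// Euclidean distance between two points.
func distance(_ a: SIMD3<Float>, _ b: SIMD3<Float>) -> Float {
    let d = a - b
    return (d.x * d.x + d.y * d.y + d.z * d.z).squareRoot()
}

/// A pinch: thumb tip and index tip of the same hand nearly touching.
func isPinching(_ hand: HandPose, threshold: Float = 0.015) -> Bool {
    distance(hand.thumbTip, hand.indexTip) < threshold
}

/// A simplified heart shape: both index tips meet and both thumb tips meet.
func isHeartShape(left: HandPose, right: HandPose, threshold: Float = 0.04) -> Bool {
    distance(left.indexTip, right.indexTip) < threshold
        && distance(left.thumbTip, right.thumbTip) < threshold
}

// Two hands held close together, index tips above thumb tips.
let leftHand = HandPose(thumbTip: [-0.01, 0.90, -0.30], indexTip: [-0.01, 1.00, -0.30])
let rightHand = HandPose(thumbTip: [0.01, 0.90, -0.30], indexTip: [0.01, 1.00, -0.30])
print(isHeartShape(left: leftHand, right: rightHand)) // true
print(isPinching(leftHand))                           // false
```

A distance threshold is the simplest possible classifier. A real app would likely also check joint tracking state and smooth the result over several frames to avoid flicker.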

The Hardware Enabling Natural Input on the Vision Pro

Apple uses a dual-chip design in Vision Pro. The first chip, M2, runs visionOS and handles general computing and multitasking. Alongside M2, there is a new chip called R1, dedicated to processing input from the cameras, sensors, and microphones and to streaming images to the display. The R1 chip processes input from the infrared cameras and motion sensors to actively track eye movements and hand gestures, which keeps the experience responsive.

The R1 chip uses eye data to determine which screen elements to render in full detail and which to simplify. Because the eye sees sharp detail only in a small region around the point of focus, the chip renders only the portion within the gaze in sharp detail, conserving resources and improving both battery efficiency and immersive viewing. This technique is called foveated rendering.
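The idea behind foveated rendering can be sketched as a simple rule: the farther a region of the display is from the gaze point, the less detail it needs. The following is a toy model in plain Swift, not Apple's implementation. The `Point2D` and `RenderQuality` types, the `quality(forTileAt:gaze:)` function, and the radius values are all assumptions made for this example.

```swift
// Illustrative only: a toy model of foveated rendering.
// All names and numeric values are hypothetical.

/// A point in normalized display coordinates (0...1 on each axis).
struct Point2D {
    var x: Double
    var y: Double
}

/// Rendering quality levels, expressed as a fraction of full resolution.
enum RenderQuality: Double {
    case full = 1.0
    case medium = 0.5
    case low = 0.25
}

/// Picks a quality level for a display tile based on its distance from the gaze point.
/// The radii are arbitrary values chosen for this example.
func quality(forTileAt tile: Point2D, gaze: Point2D,
             fovealRadius: Double = 0.15, midRadius: Double = 0.35) -> RenderQuality {
    let dx = tile.x - gaze.x
    let dy = tile.y - gaze.y
    let distanceFromGaze = (dx * dx + dy * dy).squareRoot()
    if distanceFromGaze <= fovealRadius { return .full }
    if distanceFromGaze <= midRadius { return .medium }
    return .low
}

let gaze = Point2D(x: 0.5, y: 0.5)
print(quality(forTileAt: Point2D(x: 0.55, y: 0.5), gaze: gaze)) // full
print(quality(forTileAt: Point2D(x: 0.8, y: 0.5), gaze: gaze))  // medium
print(quality(forTileAt: Point2D(x: 0.0, y: 0.0), gaze: gaze))  // low
```

With these example values, only tiles within a small radius of the gaze are rendered at full resolution, which is where the savings in rendering work come from.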

The Vision Pro's sensor set includes 14 cameras on the inside (including 4 infrared cameras); 10 cameras on the outside (2 main, 4 downward, 2 TrueDepth, and 2 side cameras); 1 LiDAR scanner; multiple infrared and invisible LED illuminators; and 6 microphones.

Vision Pro: Apple’s Next Chapter in Intuitive, Immersive Technology

Representing a groundbreaking evolution in user interactivity, Vision Pro enables intuitive navigation of virtual worlds through Apple's seamless integration of hand gestures and eye tracking. Apple has not only incorporated standard gestures into Vision Pro but also provided the flexibility to create custom gestures by using ARKit, tailored to specific applications.

The hand and eye input system has the potential to accelerate the adoption of mixed reality and computer vision technologies in the enterprise sector. The system is backed by powerful hardware, including the R1 chip. Foveated rendering, driven by the R1 chip, ensures that the device renders only the portion of the screen within the gaze area in sharp detail.

Apple's commitment to pushing the boundaries of technology and user experience is evident in the Vision Pro, which sets the stage for a new era of immersive experiences and related technology.

Note: Images are taken from the Apple developer blog and documentation
